Google’s new quantum processor is here, but what does it really mean?

December 17, 2024

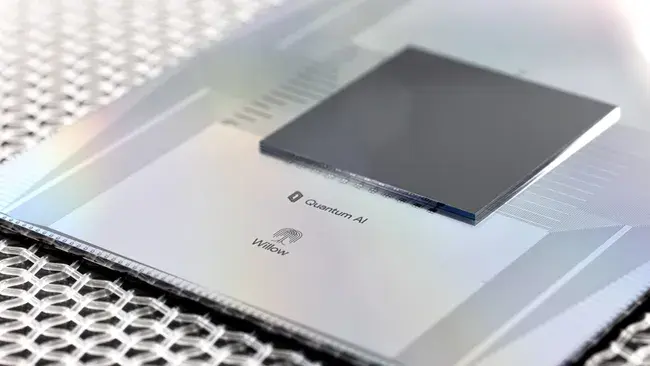

Google has just announced a significant breakthrough in quantum computing technology with the introduction of its Willow processor, the successor to the already revolutionary Sycamore. But… what are its implications?

As a disclaimer before diving into the details: the purpose of this post is not to explain how quantum computing works (that would require a much longer article, and there are already many better ones than I could write), so I will focus on what, in my opinion, this breakthrough represents in itself.

Primarily, Willow, Google’s new quantum processor, represents three major achievements:

1. A very significant increase in the number of *physical* qubits, to 105 (I will explain the italics later),

2. A significant improvement (by a factor of 5) in quantum coherence times for the type of technology used,

3. A major milestone in error correction improvement.

Why are these three factors important?

The number of qubits indicates, simplifying a bit, the “size” or “complexity” of the problem a quantum computer can solve. Today’s qubit counts are far too small for practical applications: it is estimated that even relatively simple problems, such as quantum chemistry simulations or quantum optimization and machine learning, would need a minimum of 1,000 to 10,000 *logical* qubits (I’ll get back to the italics later), or even up to 1 million of them.

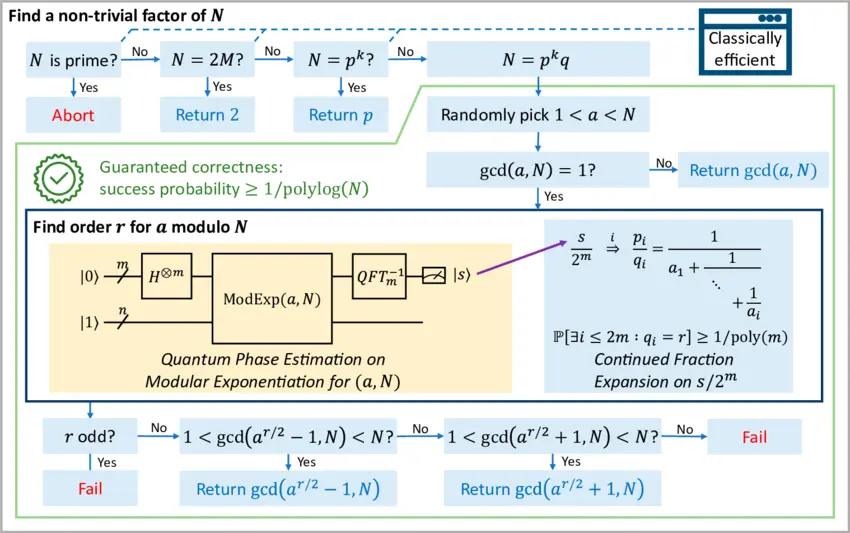

If we want to tackle problems like running Shor’s algorithm to break RSA-2048 encryption, we would be talking about around 20 million physical qubits.

A formally certified end-to-end implementation of Shor's factorization algorithm - Yuxiang Peng, Kesha Hietala, Runzhou Tao, Xiaodi Wu - ResearchGate
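Shor’s algorithm only needs the quantum computer for one step: finding the period r of f(x) = aˣ mod N. Everything else is classical number theory. The minimal sketch below, assuming a toy modulus and using hypothetical helper names (`find_period`, `shor_classical`), brute-forces that period classically. That loop can take time exponential in the number of bits of N, which is precisely the part a large error-corrected quantum computer would replace.

```python
from math import gcd


def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, by brute force.

    Requires gcd(a, n) == 1. This is the step Shor's algorithm hands to the
    quantum computer; classically it can take up to ~n iterations.
    """
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical(n: int, a: int):
    """Try to split n using a base 1 < a < n; return two factors or None."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g  # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: retry with a different a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None  # a^(r/2) is -1 mod n: retry with a different a
    return gcd(y - 1, n), gcd(y + 1, n)


print(shor_classical(15, 7))  # (3, 5)
```

For N = 15 and a = 7 the period is 4, so 7² mod 15 = 4 and the factors are gcd(3, 15) and gcd(5, 15). For a 2048-bit N, the brute-force loop is hopeless, which is where the qubit estimates above come in.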

What about that problem that would take a classical supercomputer 10 septillion (10²⁵) years to solve, but that Willow solved in under five minutes?

Without going into too much detail, this refers to random circuit sampling, a benchmark task designed to evaluate quantum processors by generating probability distributions that are very hard to emulate with classical computers. In reality, it is merely a tool for measuring the performance of quantum processors and, in passing, demonstrating their superiority over classical ones, with no direct application to real-world problems.

In other words, it is a very useful metric for engineers in the field and for the marketing departments of the companies involved, but it has no real impact on the practical usefulness of these systems.
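A rough way to see why such distributions are hard to reproduce classically: a brute-force simulator stores one complex amplitude for every basis state, so memory doubles with each added qubit. The sketch below assumes 16 bytes per amplitude (a double-precision complex number) and uses Willow’s 105 qubits as the largest case. Real classical competitors use cleverer techniques such as tensor networks, so this illustrates only the naive approach, not the cost estimate Google published.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: complex128 = two 64-bit floats


def statevector_bytes(num_qubits: int) -> int:
    """Memory a naive simulator needs to store all 2**n amplitudes."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


for n in (30, 53, 105):
    print(f"{n:>3} qubits -> {statevector_bytes(n):.2e} bytes")
```

Thirty qubits already need 16 GiB; every extra qubit doubles that, so a 105-qubit state vector is far beyond any conceivable classical memory.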

What about error correction?

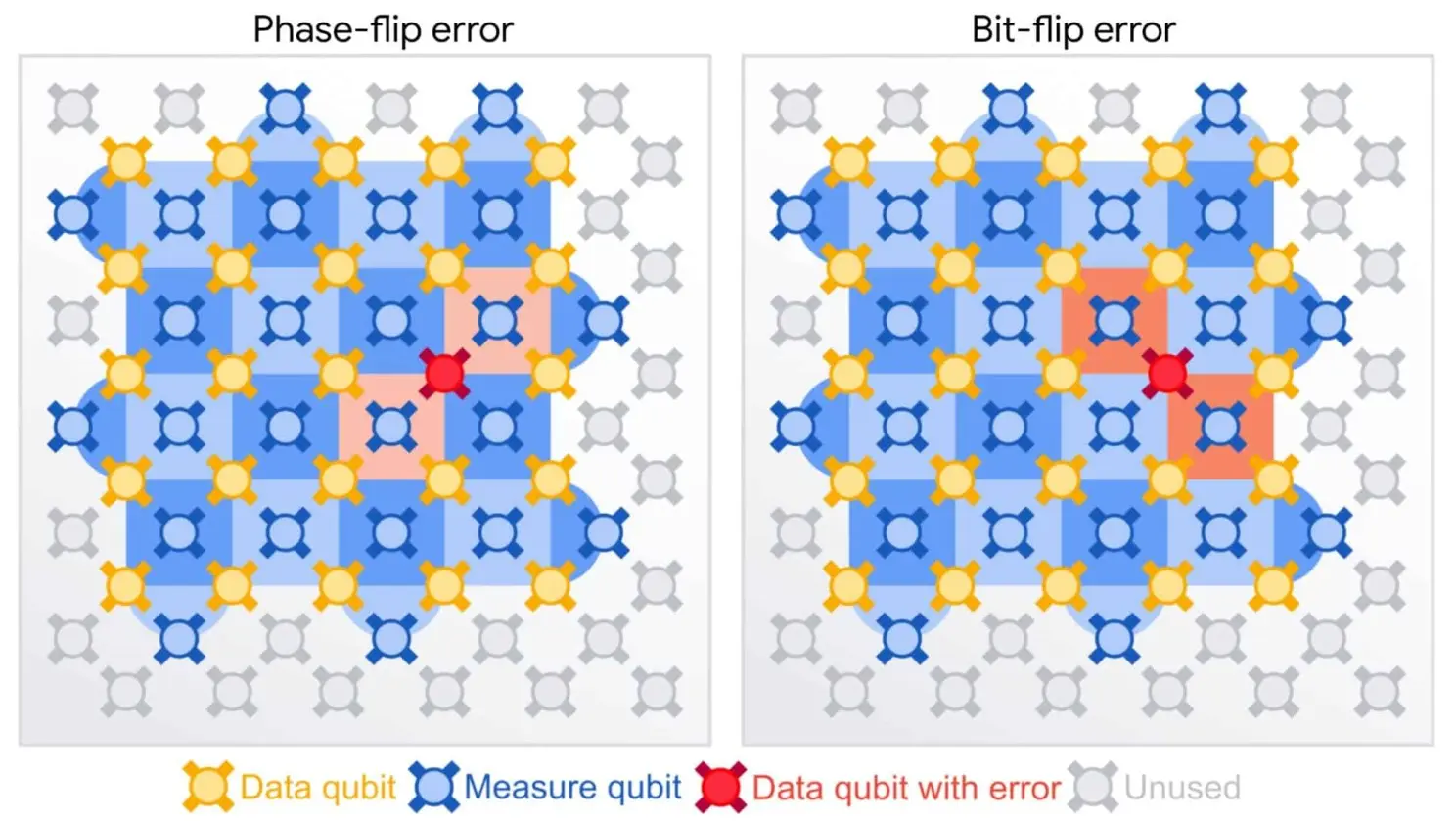

This is where things get more promising. Errors are another major issue in quantum computing, because physical qubits are extremely sensitive to environmental disturbances, which cause decoherence (loss of the quantum state) and other types of noise.

To combat this, error correction techniques distribute quantum information across multiple physical qubits. This explains the italics I used earlier: a machine has more physical qubits than logical qubits, because several of the former are needed to form one of the latter, and it is the logical qubits that actually count when performing calculations.
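As a rough illustration of the physical-to-logical overhead, assume a rotated surface code (Google’s Willow experiments use surface codes): a distance-d patch uses d² data qubits plus d² − 1 measurement qubits. The function names and the 1,000-logical-qubit example below are illustrative assumptions, and the estimate ignores routing, magic-state factories and other real-world overheads.

```python
def physical_per_logical(distance: int) -> int:
    """Physical qubits in one rotated surface-code patch of odd distance d."""
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    data_qubits = distance ** 2
    measure_qubits = distance ** 2 - 1
    return data_qubits + measure_qubits


def total_physical(logical_qubits: int, distance: int) -> int:
    """Naive total: one independent patch per logical qubit."""
    return logical_qubits * physical_per_logical(distance)


for d in (3, 5, 7):
    print(d, physical_per_logical(d))  # prints 3 17, then 5 49, then 7 97
print(total_physical(1_000, 7))  # 97000
```

Even at a modest distance of 7, a thousand logical qubits would need close to a hundred thousand physical ones.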

In previous approaches to the problem, this method required considerable resources and, in many cases, adding physical qubits did not reduce the error rate enough, because each new qubit also brought its own errors. Willow’s breakthrough is that it demonstrates exponential error reduction as more physical qubits are dedicated to each logical qubit: scaling the encoded grid from 3×3 to 5×5 to 7×7, Google reports cutting the logical error rate in half at each step. In other words, the system operates “below threshold”, the regime in which it becomes more robust as its size increases (which did not happen with previous approaches).
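The “below threshold” behaviour can be written as a simple scaling rule: each time the code distance d grows by 2, the logical error per cycle is divided by a suppression factor Λ, and Λ > 1 only when the physical error rate is below the threshold. The sketch assumes Λ = 2 (the halving described above) and a hypothetical starting error of 3×10⁻³ at d = 3; both are placeholders, not measured Willow values.

```python
def logical_error(distance: int, eps3: float, lam: float) -> float:
    """Logical error per cycle at odd distance d, scaled from the d = 3 value.

    eps_d = eps_3 / lam ** ((d - 3) / 2): every +2 in distance divides the
    error by lam. Errors shrink exponentially only when lam > 1, i.e. when
    the hardware operates below the error-correction threshold.
    """
    return eps3 / lam ** ((distance - 3) / 2)


EPS3 = 3e-3   # hypothetical logical error per cycle at d = 3
LAMBDA = 2.0  # assumed suppression factor (halving per step)

for d in (3, 5, 7, 9, 11):
    print(f"d={d:>2}: {logical_error(d, EPS3, LAMBDA):.2e}")
```

With `lam` below 1 the same formula makes errors grow with distance, which is the situation earlier approaches were stuck in.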

This allows logical qubits to preserve their state for longer and more reliably, which is vital for building practical, scalable quantum computers. That said, Willow has also run into a new limit on this exponential improvement, which Google says it is working to overcome.

Breakthrough in quantum error correction could lead to large-scale quantum computers (Physics World)

What is this coherence time all about?

Coherence time matters because it is the maximum time available to complete a calculation before the quantum state degrades and the operation in progress is lost halfway through. The roughly 100 microseconds that Willow’s physical qubits can sustain are not enough to run complex quantum algorithms, which may require thousands or millions of quantum gates, each of which takes longer to execute than a binary operation on a classical computer.

To make matters worse, the time spent executing gates adds to the non-negligible time required by error correction itself (the so-called “error correction overhead”), which increases the overall computation time.
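To put numbers on this, a back-of-the-envelope budget divides the coherence time by the duration of each gate, then shrinks the result by an error-correction slowdown factor. The 50 ns gate time and 10× overhead below are hypothetical placeholders chosen only to show the arithmetic, not Willow specifications, and modelling the overhead as a single multiplier is itself a simplification. Times are kept in integer nanoseconds to avoid floating-point rounding.

```python
def gate_budget(coherence_ns: int, gate_ns: int, ec_overhead: int = 1) -> int:
    """Sequential gates that fit in the coherence window (integer nanoseconds)."""
    if gate_ns <= 0 or ec_overhead < 1:
        raise ValueError("gate_ns must be > 0 and ec_overhead >= 1")
    return coherence_ns // (gate_ns * ec_overhead)


COHERENCE_NS = 100_000  # ~100 microseconds, the figure quoted in the text
GATE_NS = 50            # hypothetical gate duration in nanoseconds
OVERHEAD = 10           # hypothetical error-correction slowdown factor

print(gate_budget(COHERENCE_NS, GATE_NS))            # 2000
print(gate_budget(COHERENCE_NS, GATE_NS, OVERHEAD))  # 200
```

Even under these assumptions, a few hundred to a few thousand sequential gates falls far short of the millions some algorithms require.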

So, what coherence times should we aim for to perform practical calculations? Depending on the problem, they range from milliseconds (one order of magnitude above the current figure) for basic chemical simulations to 10 seconds (five orders of magnitude, or 100,000 times more) to run Shor’s algorithm, not counting the millions of physical qubits it would also require. We are making progress, but there’s still a long way to go.
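The orders of magnitude quoted above follow from a simple ratio between target and current coherence times. This minimal sketch, with a hypothetical helper name, reproduces that arithmetic using only the figures from the text.

```python
import math

CURRENT_COHERENCE_S = 100e-6  # ~100 microseconds, the current figure in the text

# Target coherence times quoted in the text, in seconds
TARGETS_S = {
    "basic chemical simulations": 1e-3,
    "Shor's algorithm": 10.0,
}


def orders_of_magnitude(target_s: float, current_s: float = CURRENT_COHERENCE_S) -> int:
    """Rounded log10 of how much longer coherence must last."""
    return round(math.log10(target_s / current_s))


for task, target in TARGETS_S.items():
    gap = orders_of_magnitude(target)
    print(f"{task}: ~10^{gap} times longer than today")
```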

So, what else is interesting about Willow?

Among other things, the manufacturing process. While this processor still requires cryogenic temperatures — on the order of millikelvins, extremely close to absolute zero — the manufacturing process uses well-developed semiconductor technologies, which greatly facilitates scalability and, hypothetically, could allow us to manufacture these processors with technologies we already know and control quite well, reducing associated costs and complexities.

How to make superconducting circuits out of a semiconductor (Tahan Research)

Other quantum computing technologies, like ion traps, are much more complex to manufacture and less scalable, and their logic gates are significantly slower, which at least partially negates the advantage of their longer coherence times. And technologies like quantum photonics or topological qubits, which Microsoft has recently made headlines about, are even less mature and much less scalable.

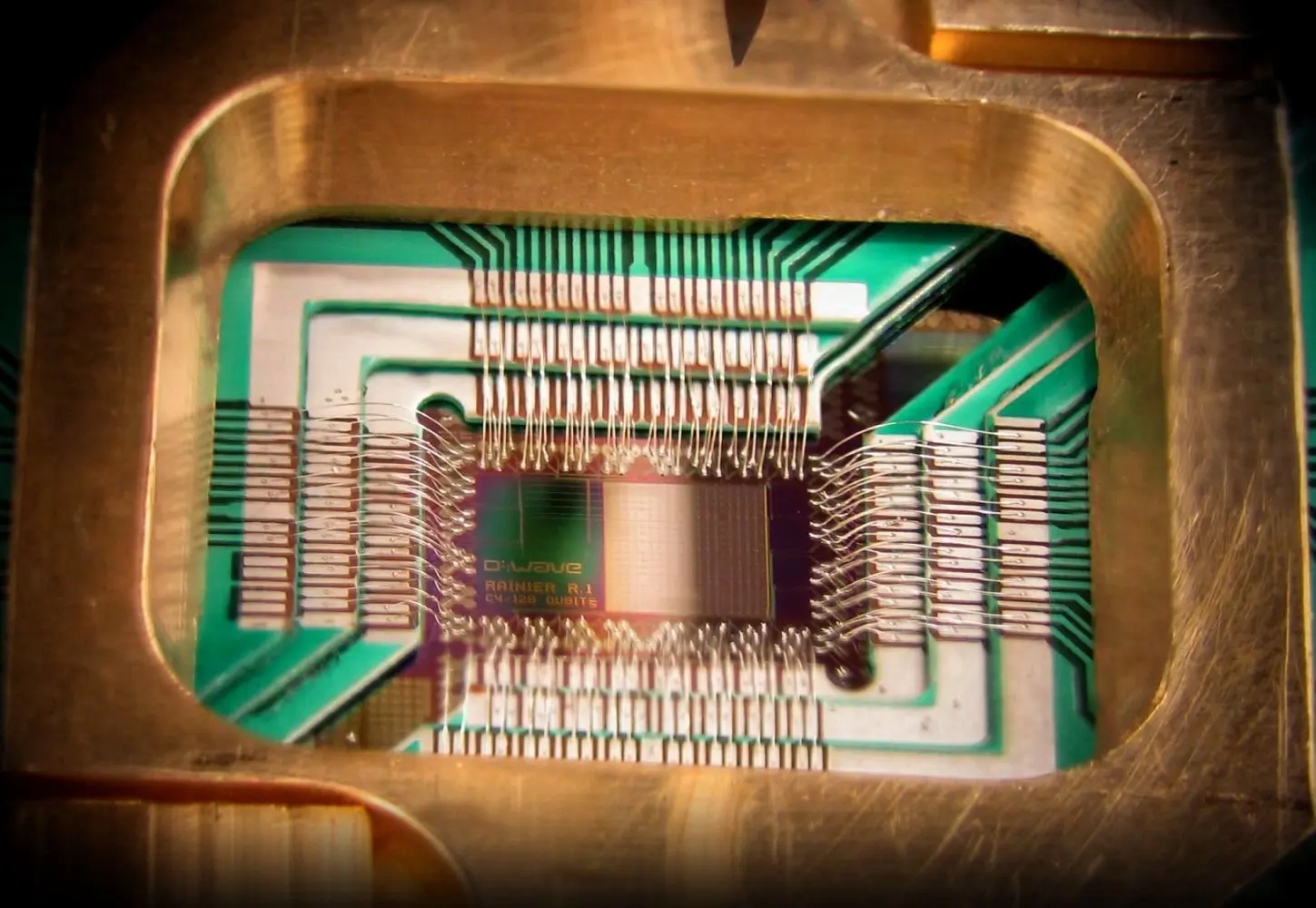

Special mention goes to the quantum annealing technology used by companies like D-Wave which, although it already has practical applications, is largely limited to fields like combinatorial optimization or parameter tuning for machine learning, where it has achieved interesting results in logistics planning, material design, and financial optimization.

However, quantum annealing has the drawback of not being a universal computing model: it cannot run arbitrary quantum algorithms such as Shor’s or Grover’s, and, being heuristic, it may not always find the optimal solution, so its solution methods require a somewhat “artisanal” approach (my apologies to the experts).

D-Wave One Quantum Annealing chip - Wikipedia/D-Wave
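To give a feel for that heuristic nature, here is a classical simulated-annealing sketch on a tiny Ising problem. It is a swapped-in classical analogue, not D-Wave’s quantum annealing (which anneals physical qubits in hardware), and the couplings, cooling schedule and function names are illustrative assumptions. Like its quantum cousin, it usually lands on a low-energy answer but offers no guarantee of the optimum.

```python
import itertools
import math
import random

# Hypothetical 4-spin Ising problem: E(s) = sum of J_ij * s_i * s_j, with s_i in {-1, +1}
COUPLINGS = {(0, 1): 1.0, (1, 2): -1.0, (2, 3): 1.0, (0, 3): -0.5, (0, 2): 0.5}
N_SPINS = 4


def energy(spins) -> float:
    """Ising energy of a spin configuration (lower is better)."""
    return sum(j * spins[a] * spins[b] for (a, b), j in COUPLINGS.items())


def simulated_annealing(steps=2000, t_start=2.0, t_end=0.01, seed=0):
    """Classical simulated annealing with single-spin flips and geometric cooling."""
    rng = random.Random(seed)
    spins = [rng.choice((-1, 1)) for _ in range(N_SPINS)]
    current = energy(spins)
    best, best_energy = spins[:], current
    for k in range(steps):
        t = t_start * (t_end / t_start) ** (k / (steps - 1))  # temperature drops over time
        i = rng.randrange(N_SPINS)
        spins[i] = -spins[i]  # propose flipping one spin
        candidate = energy(spins)
        delta = candidate - current
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            current = candidate  # accept (Metropolis rule)
            if current < best_energy:
                best, best_energy = spins[:], current
        else:
            spins[i] = -spins[i]  # reject: undo the flip
    return best, best_energy


def brute_force_minimum() -> float:
    """Exact ground-state energy by checking all 2**N_SPINS configurations."""
    return min(energy(s) for s in itertools.product((-1, 1), repeat=N_SPINS))


best, best_e = simulated_annealing()
print(best, best_e, "exact minimum:", brute_force_minimum())
```

On a 4-spin toy the exact answer is easy to check by brute force; on the large problems annealers target, that check is impossible, which is one reason tuning the schedule becomes something of an art.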

So, is quantum computing still not practical?

We can safely say that this technology still has a lot of maturing ahead of it, but that doesn’t mean the advances made so far haven’t already paid off: in basic science, in the applied engineering behind the design and construction of these machines, and in solving practical problems, such as those mentioned for quantum annealing.

Moreover, the mathematics developed to explore these systems has already given us new problem-solving methods, such as the quantum-inspired algorithms developed by our partner Multiverse Computing, with whom we collaborate at SNGULAR, which can solve classical problems, including machine learning ones, on classical computers much faster or more practically.

In summary, as in any frontier field, each advancement in this area is allowing us to open new avenues of research and knowledge that expand our scientific and technological capacity. But, unfortunately, there are still years ahead before we can have deep philosophical discussions with Artificial Intelligences based on quantum computing. However, this Google announcement is undoubtedly a giant leap and places the Mountain View company at the forefront of a revolution that promises to change the world in ways that are hard to imagine today.
