LangSmith: a review of how it makes interacting with LLM prompts easier

November 3, 2023

The evolution of Large Language Models (LLMs) continues at full speed. Not long ago, among all the information being shared on social networks (such as the article published by our AI Team), we came across LangSmith, a platform developed by LangChain to create and share prompts, build LLM applications and put them into production.

LangSmith follows the guidelines of LangChain's open-source framework, but it is currently in private beta. To join the waitlist, all you need to do is sign up with your company email address.

You can also create an organization, invite a limited number of users to it and manage your use cases. That is what we did at SNGULAR, and in this article we show you the main functionalities, advantages and disadvantages of this tool. Creating and sharing prompts has never been so easy. Here we go!

LangSmith can be used from the website or integrated into your code, as described in the documentation. Although this documentation could be more user-friendly for Prompt Specialists, it is enough to get started with the platform.
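
As a rough illustration of what integrating LangSmith into code involves, the sketch below exports the environment variables that the LangSmith documentation described at the time of writing for enabling tracing from LangChain applications. The variable names reflect the late-2023 docs and may have changed since; the API key and project name are placeholders, and the `configure` helper is hypothetical.

```python
import os

# Settings described in LangSmith's docs (late 2023) for tracing LangChain code.
# Check the current documentation: variable names may have changed since.
LANGSMITH_SETTINGS = {
    "LANGCHAIN_TRACING_V2": "true",
    "LANGCHAIN_ENDPOINT": "https://api.smith.langchain.com",
    "LANGCHAIN_API_KEY": "<your-api-key>",  # placeholder: never commit real keys
    "LANGCHAIN_PROJECT": "prompt-review",   # hypothetical project name
}


def configure(settings: dict[str, str]) -> list[str]:
    """Export each setting unless it is already defined; return the names set."""
    applied = []
    for name, value in settings.items():
        if name not in os.environ:
            os.environ[name] = value
            applied.append(name)
    return applied


print("set:", configure(LANGSMITH_SETTINGS))
```

Leaving already-defined variables untouched lets a real key exported in the shell take precedence over the placeholder.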

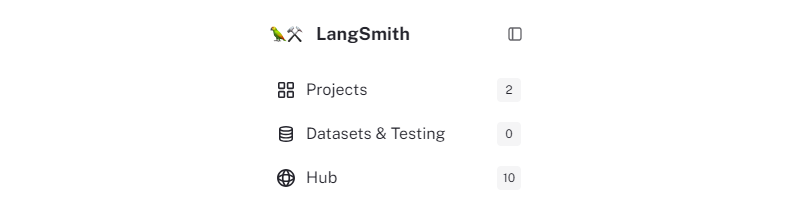

From the sidebar we can access the main sections we will work with: our projects, the datasets we manage and test, and the Hub, where the prompts shared by the community are hosted.

Figure. Sidebar 1.

At the bottom of the sidebar you will find the organization and account settings. From there we can configure our API keys, which are needed to call the models through the connection with third-party services.

Figure. Sidebar 2.

MAIN FUNCTIONALITIES AND ADVANTAGES

From the overview page we can access any of the sections mentioned above. The most relevant one for creating and sharing prompts is the Hub (or community). However, we will first look at some particularities of the projects and the datasets and testing sections, which can be considered advanced features.

Creating projects in LangSmith is very simple, and it lets you upload a test dataset to evaluate your prompts. The dataset can have one of three formats: key-value (the default), chat (with system-role and user-role inputs), and LLM (which maps the user's questions directly to the assistant's answers).

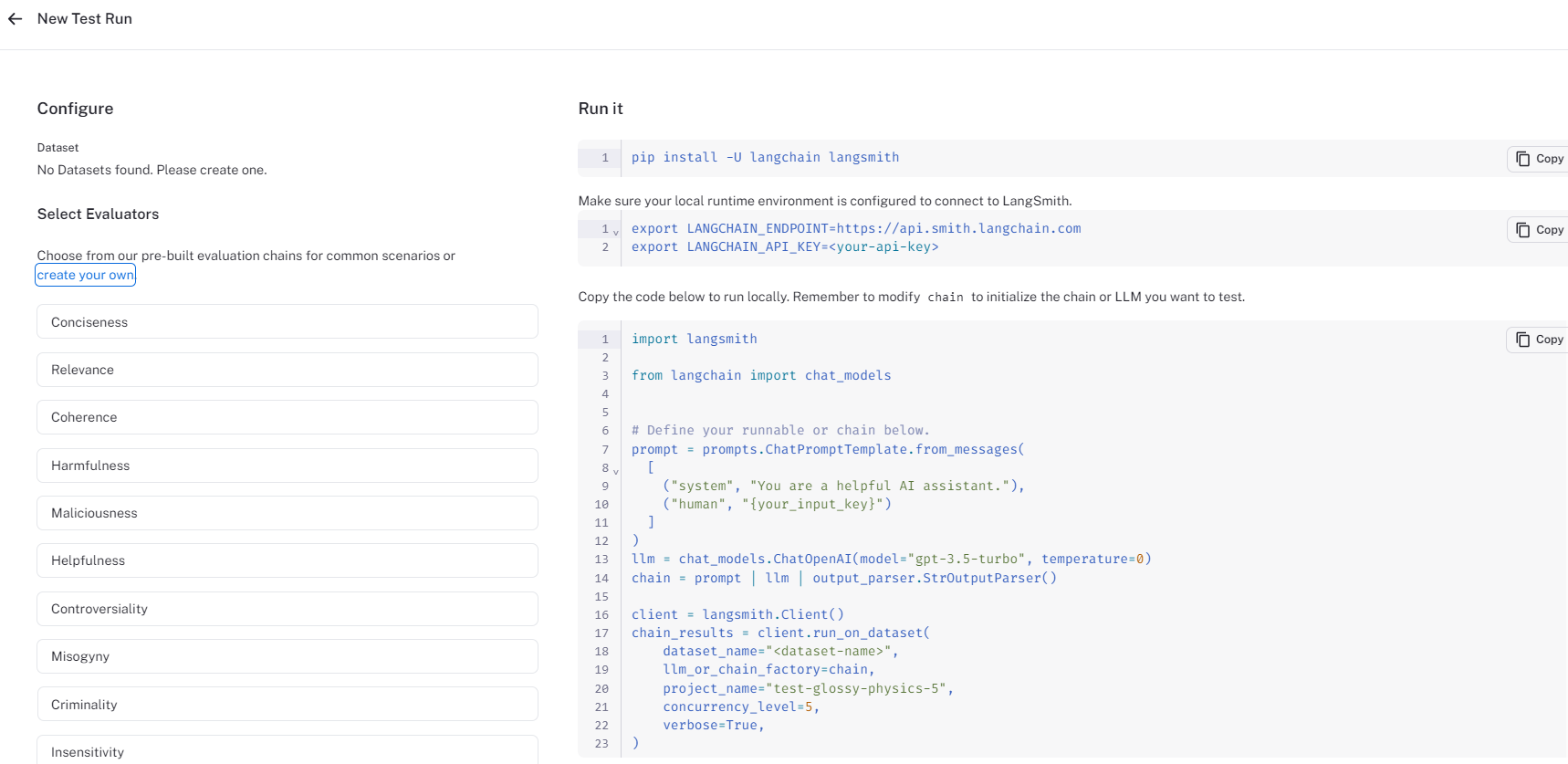
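
To make the three formats more concrete, here is a minimal sketch of one example record per format, written as plain Python dictionaries. The field names (`inputs`, `outputs`, `messages`, `input`, `output`) are assumptions chosen for illustration rather than LangSmith's exact schema, and `guess_format` is a hypothetical heuristic.

```python
from typing import Any

# Illustrative records only: field names are assumptions, not LangSmith's schema.
kv_example = {  # key-value: arbitrary named inputs and outputs
    "inputs": {"question": "What is the capital of Spain?"},
    "outputs": {"answer": "Madrid"},
}

chat_example = {  # chat: a list of role-tagged messages
    "inputs": {
        "messages": [
            {"role": "system", "content": "You are a helpful geography tutor."},
            {"role": "user", "content": "What is the capital of Spain?"},
        ]
    },
    "outputs": {"message": {"role": "assistant", "content": "Madrid"}},
}

llm_example = {  # LLM: one input string mapped directly to one output string
    "inputs": {"input": "What is the capital of Spain?"},
    "outputs": {"output": "Madrid"},
}


def guess_format(example: dict[str, Any]) -> str:
    """Roughly classify an example by the shape of its inputs (heuristic)."""
    inputs = example["inputs"]
    if "messages" in inputs:
        return "chat"
    if set(inputs) == {"input"}:
        return "llm"
    return "kv"


for name, ex in [("kv", kv_example), ("chat", chat_example), ("llm", llm_example)]:
    print(name, "->", guess_format(ex))
```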

In addition, if you create a dataset and want to test it from the tool, LangSmith provides the code to run the test and lets you select parameters and evaluators to apply to the dataset.

Figure. Evaluators Dataset.
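
The idea behind an evaluator can be sketched without the platform: run a model over every example in a dataset and score each output against the reference answer. The sketch below uses a stub in place of a real LLM call and a simple exact-match scorer; `fake_model`, `exact_match` and `evaluate` are hypothetical names, not LangSmith's built-in evaluators.

```python
from typing import Callable

# Tiny key-value dataset (hypothetical data).
dataset = [
    {"inputs": {"question": "2 + 2?"}, "outputs": {"answer": "4"}},
    {"inputs": {"question": "Capital of France?"}, "outputs": {"answer": "Paris"}},
    {"inputs": {"question": "Largest planet?"}, "outputs": {"answer": "Jupiter"}},
]


def fake_model(question: str) -> str:
    """Stand-in for an LLM call: returns canned answers."""
    canned = {"2 + 2?": "4", "Capital of France?": "paris"}
    return canned.get(question, "I don't know")


def exact_match(prediction: str, reference: str) -> float:
    """Score 1.0 if prediction equals reference, ignoring case and outer spaces."""
    return float(prediction.strip().lower() == reference.strip().lower())


def evaluate(model: Callable[[str], str], data: list[dict],
             evaluator: Callable[[str, str], float]) -> float:
    """Run the model on every example and return the mean evaluator score."""
    scores = [
        evaluator(model(ex["inputs"]["question"]), ex["outputs"]["answer"])
        for ex in data
    ]
    return sum(scores) / len(scores)


print(f"exact match: {evaluate(fake_model, dataset, exact_match):.2f}")
```

Two of the three canned answers match their references, so the mean score is 2/3.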

The Hub, on the other hand, is a space where LangSmith users can search, save, share and test their own prompts or those created by the community. It is especially useful for benchmarking. The variety of filters in the search bar (use cases, type, language, models, etc.) makes it easier to find prompts that match different interests. Moreover, its repository-like layout makes it particularly attractive.

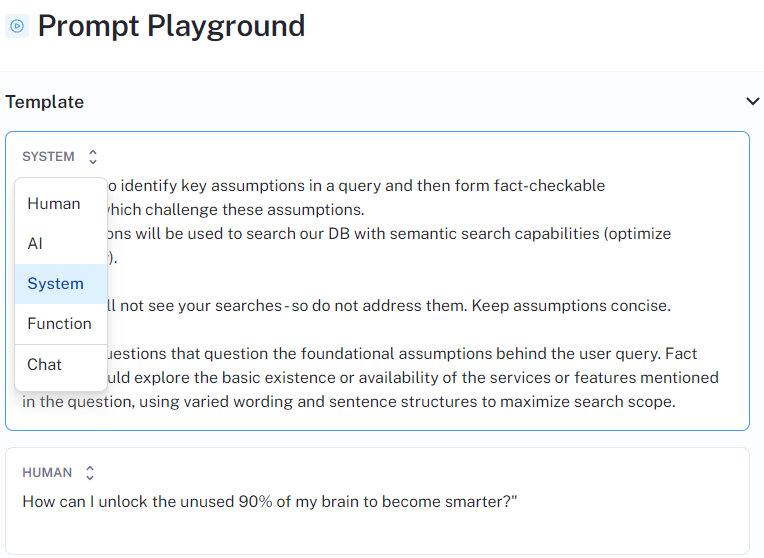

One of the most outstanding features is the Playground within each Chat Prompt Template, where we can try out other users' prompts. It is also useful that each prompt includes a Readme file documenting how to use it.

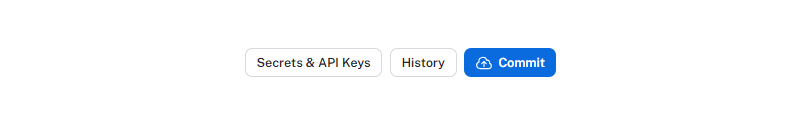
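
A Chat Prompt Template is essentially a list of role-tagged messages with placeholders that the Playground fills in with variables. A minimal sketch, assuming the `{variable}` placeholder style that LangChain templates use by default; the `render` helper and the variable names are hypothetical.

```python
# A chat prompt template as a list of (role, template) pairs.
TEMPLATE = [
    ("system", "You are a {persona}. Answer in {language}."),
    ("human", "{question}"),
]


def render(template: list[tuple[str, str]], **variables: str) -> list[tuple[str, str]]:
    """Fill every placeholder; raises KeyError if a variable is missing."""
    return [(role, text.format(**variables)) for role, text in template]


messages = render(
    TEMPLATE,
    persona="computational linguist",
    language="English",
    question="What is a morpheme?",
)
for role, content in messages:
    print(f"{role}: {content}")
```

Raising on a missing variable is deliberate: a silently empty slot would produce a misleading prompt.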

Likewise, we can review our prompts or create new ones in My prompts. Whether we have forked an existing prompt or created one from scratch, we can commit the version we are working on. This saves it so that it is registered and can be reviewed by the community or by our working group.

We can also save versions as examples within the prompt template or go further and share them publicly with the community or privately with our workgroup.

Figure. History commits.
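
The commit-and-fork workflow resembles version control. The following sketch models it with a hypothetical in-memory `PromptRepo` class: each commit gets an id derived from its content and its parent, and a fork copies the history before diverging. It only illustrates the concept; it is not how the Hub stores prompts internally.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptRepo:
    """Hypothetical in-memory prompt repository with a linear commit history."""
    name: str
    commits: list[tuple[str, str]] = field(default_factory=list)  # (id, text)

    def commit(self, text: str) -> str:
        parent = self.commits[-1][0] if self.commits else ""
        # Hash parent id plus content, so the same text committed at a
        # different point in history still gets a different id.
        digest = hashlib.sha256((parent + text).encode("utf-8")).hexdigest()[:8]
        self.commits.append((digest, text))
        return digest

    def latest(self) -> str:
        return self.commits[-1][1]

    def fork(self, new_name: str) -> "PromptRepo":
        # A fork starts from a copy of the full history and then diverges.
        return PromptRepo(new_name, list(self.commits))


base = PromptRepo("summarizer")
base.commit("Summarize the text: {text}")
base.commit("Summarize the text in three bullet points: {text}")

mine = base.fork("summarizer-es")
mine.commit("Resume el texto en tres viñetas: {text}")

print([cid for cid, _ in base.commits])
print(len(mine.commits), mine.latest())
```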

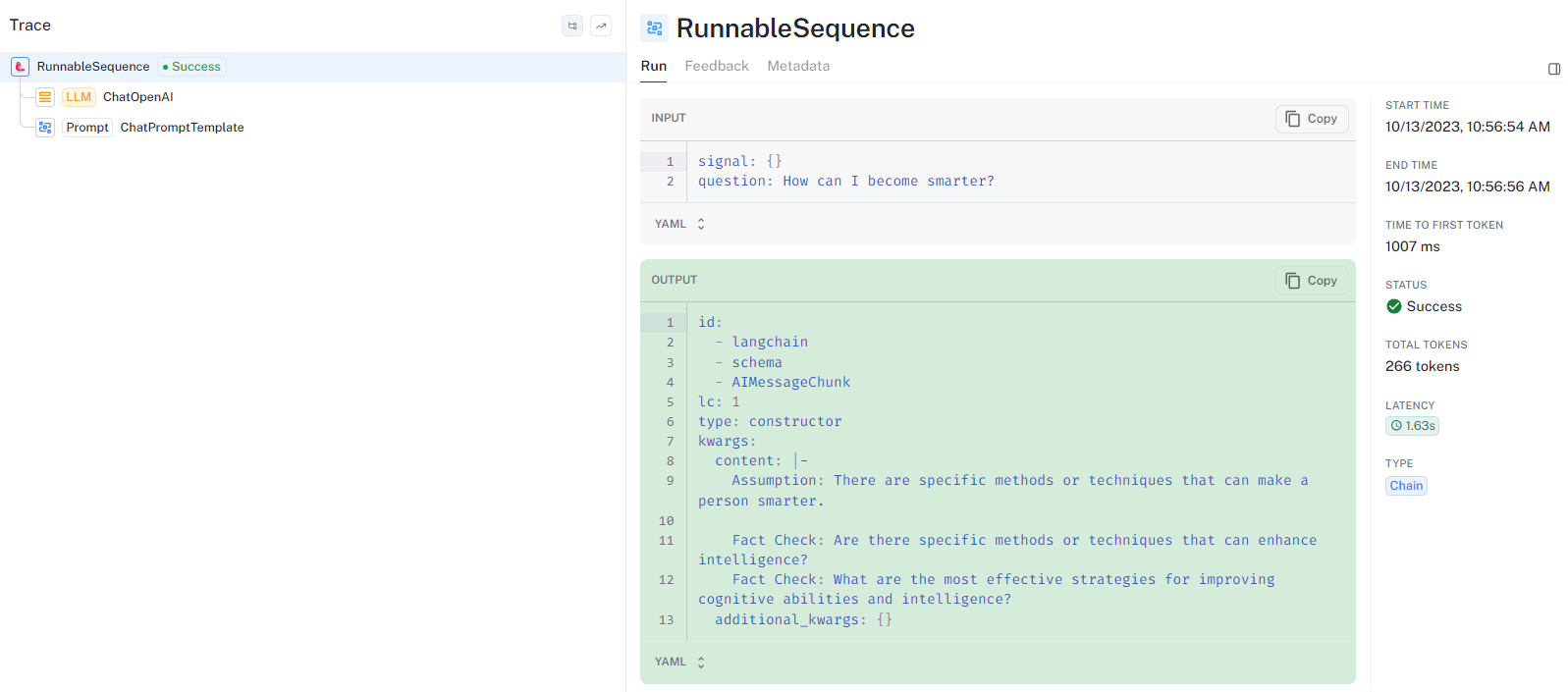

Finally, we can see the History of a prompt to trace its runs, which is awesome!

In each run we can see the template, the variables used, the parameters selected for the model call, and the output. What is more, we can also see the token consumption and the latency of the call.

Figure. Runs.
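
The information a run exposes can be mirrored in a small record. The sketch below wraps a stubbed model call, measures latency with `time.perf_counter`, and counts tokens by splitting on whitespace, which is only a crude stand-in for the provider's tokenizer. The `Run` class, `traced_call` and `echo_model` are hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Run:
    """Fields mirroring what the article says a run shows (illustrative)."""
    template: str
    variables: dict
    params: dict
    output: str
    prompt_tokens: int
    completion_tokens: int
    latency_s: float


def approx_tokens(text: str) -> int:
    # Crude whitespace count; real usage comes from the model's tokenizer.
    return len(text.split())


def traced_call(template: str, variables: dict, params: dict,
                model: Callable[..., str]) -> Run:
    prompt = template.format(**variables)
    start = time.perf_counter()
    output = model(prompt, **params)
    latency = time.perf_counter() - start
    return Run(template, variables, params, output,
               approx_tokens(prompt), approx_tokens(output), latency)


def echo_model(prompt: str, temperature: float = 0.0, max_tokens: int = 16) -> str:
    """Stand-in for an LLM: ignores temperature, echoes up to max_tokens words."""
    return " ".join(prompt.split()[:max_tokens])


run = traced_call("Translate to Italian: {text}", {"text": "good morning"},
                  {"temperature": 0.2, "max_tokens": 3}, echo_model)
print(run.output, run.prompt_tokens, run.completion_tokens, f"{run.latency_s:.6f}s")
```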

However, as a beta, the platform still has some shortcomings and room for improvement, which we point out in the next section (others are surely still to be discovered).

SHORTCOMINGS AND DISADVANTAGES

As we have seen, there are a few noteworthy features in this tool developed by LangChain. Nevertheless, the beta version has some limitations we must mention.

Firstly, although the connection with third-party services is a powerful feature of this tool, it is also a limitation, because it depends on the providers: only models from the available providers (OpenAI, Azure and Proxy) can be called, and only if you have the credentials. The model version is another limiting factor, as is the maximum number of tokens per output, which sometimes forces the call to be split into segments. This may cause loss of context and inconsistent responses.
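
To see why segmenting a call can lose context, consider splitting an input into chunks that each fit a fixed budget and sending each chunk separately. In this sketch the budget is counted in words rather than real tokens (an assumption for simplicity), and `segment` is a hypothetical helper.

```python
def segment(text: str, max_words: int) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


chunks = segment("Maria bought a car. She drove it home.", max_words=4)
for n, chunk in enumerate(chunks, start=1):
    print(n, chunk)
```

The second chunk contains the pronoun "She" but not its antecedent "Maria", so a model that only sees that segment cannot resolve the reference.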

It is true that the model has context memory, but this context must be built manually. While other interfaces offer a chat view, here the repository-like format makes it a tedious process: the user has to copy the AI response and paste it as a new message chained to the prompt, selecting the AI option among the role options.

Figure. Role options.
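
The manual copy-and-paste step amounts to appending the model's reply to the message list with the AI role before adding the next user input. A sketch of that loop, assuming the `system`/`human`/`ai` role names used in LangChain chat templates; `chain_turn` and `stub_model` are hypothetical.

```python
from typing import Callable

Message = tuple[str, str]  # (role, content)


def chain_turn(history: list[Message], user_text: str,
               model: Callable[[list[Message]], str]) -> list[Message]:
    """Append the user turn, call the model, and append its reply as 'ai'."""
    history = history + [("human", user_text)]
    reply = model(history)
    return history + [("ai", reply)]


def stub_model(history: list[Message]) -> str:
    """Stand-in for an LLM: reports how many messages of context it received."""
    return f"I can see {len(history)} messages."


conversation: list[Message] = [("system", "You are a concise assistant.")]
conversation = chain_turn(conversation, "Hi!", stub_model)
conversation = chain_turn(conversation, "What did I just say?", stub_model)
for role, content in conversation:
    print(f"{role}: {content}")
```

Because each call receives the whole history, the second reply sees four messages, including the first AI answer.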

The tracing feature is really powerful, but not everything can be traced. One of the most frequently mentioned shortcomings of LangSmith is that it is not possible to track commits or changes per user within a workgroup, which is cumbersome for organizations where several members work on the same prompt at the same time. Forking is recommended in these cases, but it is still costly to identify which version corresponds to each user.

As for the layout, the parameters lack a visible drop-down menu and, in our opinion, the order in which they are displayed could be improved.

Last but not least, the documentation is rather brief and could be more intuitive for non-technical people such as Computational Linguists or Prompt Specialists, helping them understand the platform better. The less intuitive functionalities only become clear as you test them, and this is something that could be improved.

CONCLUSIONS

We have explored LangSmith, which we consider a very interesting application. The repository format of the Hub (or community) and the tracing feature are a step forward in the creation, versioning, publication and standardization of prompt modeling. In addition, the connection to third-party services is quite powerful, although it has some shortcomings.

We are aware of the limitations of this beta version of LangSmith, but trying it out has been a good and enriching experience as we continue exploring the world of prompt engineering.

Co-authors

Luca de Filippis

Computational Linguist & Prompt Specialist

https://www.linkedin.com/in/lucadefilippis/

Ana García Toro

Computational Linguist & Prompt Specialist

https://www.linkedin.com/in/ana-garcia-toro/

Virginia Cinquegrani

Computational Linguist & Prompt Specialist
